Why So Slow? Blame Jevons Paradox, Bufferbloat And Cruft

A recent article led me to read more about an interesting idea called Jevons Paradox. William Stanley Jevons postulated that “technological progress that increases the efficiency with which a resource is used tends to increase (rather than decrease) the rate of consumption of that resource”. This idea runs counter to the common expectation that, when technology makes the use of a resource more efficient, consumption of that resource should go down. It has often been overshadowed by more famous ideas like “Moore’s Law”, but it is no less fascinating when applied to computing and IT infrastructure.

The question is, how do we know that Jevons Paradox may be true? One great example is the perception of computing speed. A commonly overlooked part of every IT infrastructure is the printing process. Take a step back to the early nineties and think of (if you can remember that far back) what it felt like to print from a DOS application like WordPerfect. If the driver you needed was set up and working, you would hear the print job starting almost instantly. Contrast this with today’s massive operating system stacks with standard driver models and spooling capabilities. What you’re left with is more options and power, but the print job you just started takes a lot longer than “instantly”.

This fight with perception is a constant struggle for those in the IT industry. The millions of lines of code that go into today’s operating systems grind to an almost instant halt when a process sits at 100% CPU usage, and the computer is immediately perceived to be “too slow”. The user is then left to remark, rather quizzically, “it doesn’t make sense, this is a new computer”.

The ongoing march toward better, more efficient and cheaper storage has led to incredible advances in optical and magnetic technologies. We have things like RAID and NAS that give us a once almost unthinkable amount of storage. In 1995, I spent a good portion of my time sorting out and removing unneeded files on my 200 MB hard disk; today, I do the same on my 2,000 MB hard disk. Both are near full capacity. It’s amazing that, as we are able to process and store more, we’re also using more.

Fast switches create Bufferbloat

Most of us have a great deal of bandwidth available to us compared to what was possible when modems ruled. The one aspect of speed that seems to have taken the biggest hit is latency (defined simply as the delay, or the time a packet takes to get to you). In fact, for many years latency, not bandwidth, has been described as the main issue in networking.

This all leads us to the improved switching devices we use on gigabit networks. The packet buffers inside these devices aren’t tuned as well as they could be, causing what has been coined Bufferbloat. The increasing use of buffers to handle packets and congestion leads to a slowdown where there shouldn’t logically be one.
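To see why oversized buffers hurt latency, it helps to work out how long a full buffer takes to drain. The sketch below is only a back-of-the-envelope illustration: it assumes a single first-in, first-out buffer, and the 256 KiB buffer size and the link rates are made-up figures, not measurements from any particular device.

```python
def queue_delay_ms(buffer_bytes: int, link_bits_per_sec: float) -> float:
    """Time in milliseconds for a packet at the back of a full FIFO buffer
    to reach the wire, given the rate at which the link drains the buffer."""
    return buffer_bytes * 8 / link_bits_per_sec * 1000


# Hypothetical buffer size, chosen only for illustration.
BUFFER_BYTES = 256 * 1024

# Hypothetical link rates: a fast LAN link and two slower uplinks.
LINK_RATES = [("1 Gbit/s", 1e9), ("10 Mbit/s", 10e6), ("1 Mbit/s", 1e6)]

for label, rate in LINK_RATES:
    delay = queue_delay_ms(BUFFER_BYTES, rate)
    print(f"{label}: a full buffer adds about {delay:.1f} ms of delay")
```

With these assumed numbers, the same buffer that drains in about 2 ms on a gigabit link holds roughly 210 ms of traffic at 10 Mbit/s and over two seconds at 1 Mbit/s. A buffer sized for the fast side of the network becomes a long queue wherever it meets a slower link.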

Better Computers, Better Cruft

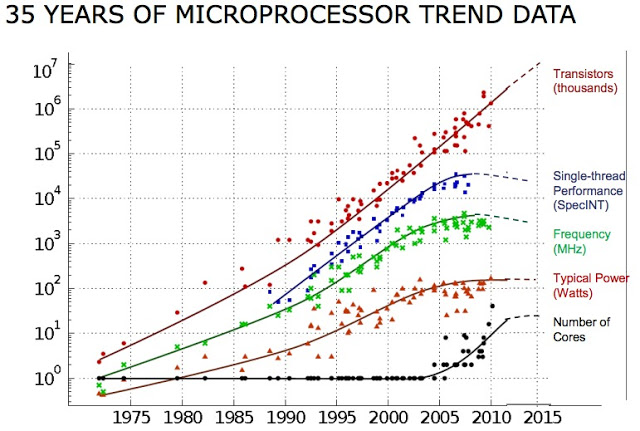

Source: lanl.gov

Our computers are better too. We’re using multi-core processors that can easily handle some of the most intensive processing tasks. So why do computers not feel many times faster than they did when DOS applications were the norm? Surely the perceived speed of basic tasks should stay at least constant as computing improvements are made? If you weren’t blessed to have used DOS back then, take a look at this short video of an apparent 486 computer running a few DOS applications. Pay close attention to the responsiveness of the system to keystrokes and the speed at which applications load once invoked. Compare that to loading any larger application on a Windows-based computer today.

There are many examples of this paradox in practice. The more advanced and powerful computers get, the more advanced the utilities that load them down seem to get too. I have seen a number of computers that are vastly more powerful than the computers aboard the Apollo spacecraft, yet move no faster than a hobble when loaded down with all sorts of virus scanners, spyware and innocuous utilities. More and more, it seems that useless stuff lingers in computers. What’s this useless stuff that tends to slow us down? Well, it’s called cruft.

Here’s another way to look at cruft. Take an Apple iPad tablet after a user has owned it for a couple of years. That user has likely downloaded and installed hundreds of apps, removed some and generally just left the rest he or she wasn’t sure about. You’ll find that “paid” apps also linger on iPads long past their usefulness. When the user first purchased the iPad, the YouTube app (for example, because of its wonderfully bland icon) would be found immediately. But after years of use and app installations, finding YouTube in any reasonable amount of time becomes very difficult for our poor user: countless flips back and forth through pages, opening and closing of folders, and a final Spotlight Search because the user gives up. That’s cruft.

So where does this all lead us? Will it push us toward greater simplicity, rather than toward products that grow more complicated to accommodate cruft? It seems entirely possible that, in the near future, we may see self-organizing systems force the removal of applications that have not been used for a specific duration or were not explicitly requested.
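As a rough sketch of what such a clean-up rule might look like, the snippet below flags applications that have not been opened within a chosen idle period. Everything in it is hypothetical: the function name `stale_apps`, the app names, the dates and the 90-day threshold are invented for illustration and do not describe how any real operating system behaves.

```python
from datetime import datetime, timedelta


def stale_apps(last_used: dict[str, datetime],
               now: datetime,
               max_idle: timedelta) -> list[str]:
    """Return the names of apps whose last use is older than max_idle,
    sorted alphabetically so the result is predictable."""
    return sorted(name for name, used in last_used.items()
                  if now - used > max_idle)


# Hypothetical usage records for a well-worn tablet.
now = datetime(2024, 1, 1)
last_used = {
    "YouTube": now - timedelta(days=2),
    "OldGame": now - timedelta(days=400),
    "Scanner": now - timedelta(days=91),
}

# Flag anything untouched for more than 90 days (an arbitrary threshold).
candidates = stale_apps(last_used, now, timedelta(days=90))
print("Candidates for removal:", candidates)
```

A real system would also have to respect the second condition mentioned above, applications that were never explicitly requested, which would need installation records this sketch does not model.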