Updating Docker containers safely

For anyone who runs one or more Docker applications, a big challenge is keeping containers running smoothly on updated images. You could force updates with tools like Watchtower, but automatic updates can just as easily break your applications. Here are some of the ways I keep my Docker applications up to date while having them fail as little as possible. This only covers docker run/create and docker-compose (for now).

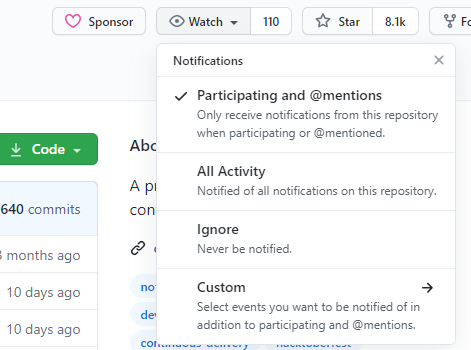

Watch the GitHub Page

Once I accepted that updates were breaking things too often, it was clear I needed a better strategy for keeping track of them. Just about every Docker image out there has a corresponding source repository. The most common host is GitHub, but you can adapt this advice to whichever platform your image uses. These platforms all tend to have a “watch” feature that lets you keep track of activity in the project.

When you get an email about new activity or a release, go look at the release notes on GitHub, the changes to the project, and possibly even the discussions that have happened around those changes. These will all help you determine whether the update is minor, or whether you need to take more serious action because a big change is coming. These details are the best protection against the “update and kill your container” cycle.

Use a tool, but read-only

Very early in my use of Docker, it became clear that letting a tool auto-update containers was bad. Really bad. Like, destroy-your-system bad. One example is the recent rename of Bitwarden_rs to Vaultwarden, which required you to manually attend to some naming changes. Another is an image that updates and no longer supports a feature you rely on. More and more, changes in Docker images need to be handled carefully.

The first tool I used was the popular Watchtower. It checks all your images for new versions and updates them. But given that you want to monitor and stay in control, the best approach is to run it with the WATCHTOWER_NOTIFICATIONS=email environment variable (with your mail settings filled in). Then, in your run or create statement, be sure to start the container with the --monitor-only switch. Watchtower was great, but I was running it from the command line, and I was missing email messages. I looked for something better.
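A monitor-only Watchtower container might be started roughly like this. This is a minimal sketch, not my exact command: the function name and the DOCKER override are hypothetical helpers, and every SMTP value is a placeholder you would replace with your own mail settings.

```shell
# Sketch: run Watchtower so it only reports new images and never updates them.
# All example.com addresses and the password are placeholders (assumptions).
# Set DOCKER=echo to preview the command instead of running it.
start_watchtower_monitor() {
  "${DOCKER:-docker}" run -d \
    --name watchtower \
    --restart unless-stopped \
    -v /var/run/docker.sock:/var/run/docker.sock \
    -e WATCHTOWER_NOTIFICATIONS=email \
    -e WATCHTOWER_NOTIFICATION_EMAIL_FROM=watchtower@example.com \
    -e WATCHTOWER_NOTIFICATION_EMAIL_TO=me@example.com \
    -e WATCHTOWER_NOTIFICATION_EMAIL_SERVER=smtp.example.com \
    -e WATCHTOWER_NOTIFICATION_EMAIL_SERVER_PORT=587 \
    -e WATCHTOWER_NOTIFICATION_EMAIL_SERVER_USER=me@example.com \
    -e WATCHTOWER_NOTIFICATION_EMAIL_SERVER_PASSWORD=change-me \
    containrrr/watchtower \
    --monitor-only
}
```

Running DOCKER=echo start_watchtower_monitor prints the full command first, which is a cheap way to check the settings before anything is created.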

Then I found diun (Docker Image Update Notifier). This tool does many of the same things Watchtower does (although diun only watches), but it supports notifications via Discord (a popular chat service). I could hook it into my own Discord server and get push notifications on my phone. Another benefit was that I had no issues placing it in a docker-compose.yml file, which makes updating the tool itself easier. I’ve been using diun for a while, and it is my default right now.
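For reference, a diun compose file with Discord notifications could look something like the one written by this helper. Treat it as a starting point under assumptions: the function name is hypothetical, the webhook URL is a placeholder, the six-hour schedule is an arbitrary choice, and the "serve" command applies to recent diun releases.

```shell
# Sketch: write a starting-point docker-compose.yml for diun into a directory.
# The webhook URL is a placeholder; create a real one in your Discord server.
# Older docker-compose releases may also need a top-level "version" key.
write_diun_compose() {
  target_dir="${1:-.}"
  mkdir -p "$target_dir" || return 1
  cat > "$target_dir/docker-compose.yml" <<'EOF'
services:
  diun:
    image: crazymax/diun:latest
    container_name: diun
    command: serve
    volumes:
      - ./data:/data
      - /var/run/docker.sock:/var/run/docker.sock
    environment:
      - "TZ=Canada/Eastern"
      - "DIUN_WATCH_SCHEDULE=0 */6 * * *"
      - "DIUN_PROVIDERS_DOCKER=true"
      - "DIUN_PROVIDERS_DOCKER_WATCHBYDEFAULT=true"
      - "DIUN_NOTIF_DISCORD_WEBHOOKURL=https://discord.com/api/webhooks/<id>/<token>"
    restart: unless-stopped
EOF
}
```

With DIUN_PROVIDERS_DOCKER_WATCHBYDEFAULT set to true, every running container is checked without needing per-container labels, which matches the "watch everything, change nothing" approach described above.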

Convert to docker-compose

Early on with Docker, I used docker create and docker run statements to get containers working. These were fine, simple, and provided all the information needed right there on the command line. At the start, docker-compose seemed messy. As I continued to use “run” statements, I started building scripts to update the underlying containers. As you might imagine, the script started to grow in size as I needed to keep track of more containers. Here’s a section of my script that updated freshrss:

"freshrss") echo "Updating freshrss Docker Container..." echo "Stopping $1..." docker stop $1 echo "Removing $1..." docker rm $1 echo "Creating new container..." docker create \ --name=freshrss \ -e PUID=1000 \ -e PGID=1000 \ -e TZ=Canada/Eastern \ -p 8087:80 \ -p 8086:443 \ -v /data/rss:/config \ --restart unless-stopped \ linuxserver/freshrssecho "Starting $1..." docker start $1 echo "----Log Start ----" echo "------------------" docker logs -f $1 ;;

The challenge here was that the create/run statements were getting large and prone to errors. I was starting to keep multiple copies of these statements in different locations, and in a couple of cases I had to dig the correct “create” statement out of my terminal command history. This approach also wouldn’t scale as I started to expand into applications (such as TT-RSS) that use multiple images and multiple containers. It was getting a little crazy.

With docker-compose, these scripts were already done for me. I’d just have to make sure a docker-compose.yml file was in the data volume on my server, and then I could run docker-compose up -d and the container would be recreated.
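To make the conversion concrete, the freshrss create statement above maps onto a compose file along these lines. The values are taken from that statement; the helper function and its directory argument are hypothetical, and this is a sketch of one reasonable mapping, not the only one.

```shell
# Sketch: write a docker-compose.yml equivalent to the freshrss
# "docker create" statement into the given directory.
# Older docker-compose releases may also need a top-level "version" key.
write_freshrss_compose() {
  target_dir="${1:-.}"
  mkdir -p "$target_dir" || return 1
  cat > "$target_dir/docker-compose.yml" <<'EOF'
services:
  freshrss:
    image: linuxserver/freshrss
    container_name: freshrss      # --name=freshrss
    environment:                  # each -e flag
      - PUID=1000
      - PGID=1000
      - TZ=Canada/Eastern
    ports:                        # each -p flag, quoted so YAML keeps them as strings
      - "8087:80"
      - "8086:443"
    volumes:                      # each -v flag
      - /data/rss:/config
    restart: unless-stopped       # --restart unless-stopped
EOF
}
```

Each flag from the create statement becomes one key in the file, so the parameters live in exactly one place and docker-compose up -d handles the stop, remove, create, and start steps.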

Bring it all together

Truly, I wanted to automate this as much as possible while having only one place (besides the backup) where my container parameters would be kept. With a basic, clear data/docker-compose.yml structure, I’d be able to update multi-container applications with just one command:

cd /data/ttrss && docker-compose pull && docker-compose up -d && docker-compose logs -f
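If you keep several applications this way, the same steps can be wrapped in a small function. This is a sketch under assumptions: update_stack and the COMPOSE override are hypothetical names, and it prints the last few log lines instead of following them so that it can be called for one directory after another.

```shell
# Sketch: pull new images and recreate the containers for one compose project.
# Set COMPOSE=echo to preview the steps, or COMPOSE="docker compose" for the
# plugin form of the command.
update_stack() {
  stack_dir="$1"
  compose="${COMPOSE:-docker-compose}"
  if [ ! -f "$stack_dir/docker-compose.yml" ]; then
    echo "No docker-compose.yml in $stack_dir" >&2
    return 1
  fi
  (
    # Run in a subshell so the caller's working directory is untouched.
    cd "$stack_dir" || exit 1
    # $compose is left unquoted on purpose so a two-word command still works.
    $compose pull &&
      $compose up -d &&
      $compose logs --tail=20   # last 20 lines per container, then return
  )
}

# Example (paths are placeholders):
#   for dir in /data/ttrss /data/rss; do update_stack "$dir"; done
```

The one-line command is still the better choice when you want to watch a single application start up, since logs -f keeps streaming until you stop it.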

The added benefit here is seeing logs for all the containers together as they start up. Truly as good as it gets right now. I’d be interested to see what you’re doing to keep track of image updates and how you manage your containers.