Fun with Discourse on Docker

Discourse is a popular open-source forum tool that you can self-host. It can be installed bare-metal on Linux, but the Docker installation is the one I found most interesting. Unlike most Docker-implemented tools, Discourse has a script that runs outside of the Docker containers (on your base machine) to set up the tool. This monolithic script (./discourse-setup) seems to do many of the things that docker-compose does, but obviously worse. I’m no fan of doing things this way, especially when a plain docker-compose alternative is nowhere on their site. My challenge this time was to build a basic docker-compose.yml that would get Discourse running in a test environment. Here’s what I did.

Damn, everything about how Discourse is setup in Docker is completely broken. These guys are making it way too difficult to run this stuff. Stupid domain validations and probably more. Just offer a way to build a docker run statement at least – https://t.co/7rrxlhBcxS #brutal

— Kevin Costain (@cwlco) November 23, 2020

I should mention, I do imagine the ./discourse-setup script works for most, but there are some that can’t just have the whole process taken out of the native Docker environment. For example, setting up a server that already has ports 80 and 443 used by a reverse proxy, the script will fail on the first check and stop. There is no local test environment this thing can run in. The image seems to come with everything needed (including a reverse proxy), but what if you wanted to understand how it can be integrated into your existing environment? This script won’t help you quickly bootstrap things (even though they seem to think it can).
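To find out quickly whether a machine is in that situation, a check along these lines can help. This is a minimal sketch, assuming a Linux host with the ss tool (from iproute2) installed; the helper name port_is_listening is my own and not part of Discourse or Docker.

```shell
# Succeeds if `ss -ltn` output (read from stdin) shows a listener on the given port.
# Column 4 is "Local Address:Port", e.g. 0.0.0.0:80 or [::]:443.
port_is_listening() {
  awk -v port="$1" '
    NR > 1 {
      n = split($4, parts, ":")
      if (parts[n] == port) found = 1
    }
    END { exit(found ? 0 : 1) }
  '
}

# Check the two ports the setup script insists on.
for port in 80 443; do
  if ss -ltn | port_is_listening "$port"; then
    echo "port $port is already taken"
  else
    echo "port $port looks free"
  fi
done
```

If port 80 turns out to be taken, the "80:80" mapping in the compose file further down would need a different host port (for example "8080:80") for the test to start.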

First steps

Naturally, the first step is to get Docker installed on a Linux machine. I’ll leave that to you, but one thing I did want to mention is Ubuntu 18.04. If you selected Docker during the OS installation, it will have been installed as a snap. This has to be removed with the command snap remove docker, and then Docker and docker-compose installed normally as per their site.

Pull the images

Discourse is a monster of requirements. Far more than I expected a forum would need, but for this, you have no choice in the matter. Make sure you have lots of RAM and drive space for this stuff and just start by pulling all the images (docker-compose would do this later, but this pre-stage can be done too). I’m going to pull the images available from indiehosters. I’m not sure how they differ from the official Docker image, but that’s another test. Run these and get coffee.

# docker pull indiehosters/discourse
# docker pull indiehosters/discourse-web
# docker pull postgres
# docker pull redis

Edit and construct the docker-compose.yml

Here is the full docker-compose.yml file that worked for me:

version: '2.1'

networks:
  lb_web:
    external: true
  back:
    driver: bridge

services:
  web:
    image: indiehosters/discourse-web
    volumes:
      - /data/assets:/home/discourse/discourse/public/assets
      - /data/uploads:/home/discourse/discourse/public/uploads
    environment:
      - VIRTUAL_HOST
    networks:
      - back
      - lb_web
    ports:
      - "80:80"
    depends_on:
      - app

  app:
    image: indiehosters/discourse
    volumes:
      - /data/assets:/home/discourse/discourse/public/assets
      - /data/uploads:/home/discourse/discourse/public/uploads
      - /data/backups:/home/discourse/discourse/public/backups
    environment:
      - DISCOURSE_HOSTNAME=ubuntuvm.ecwl.cc
      - POSTGRES_USER=discourse
      - DISCOURSE_SMTP_PORT=587
      - DISCOURSE_SMTP_ADDRESS
      - DISCOURSE_SMTP_USER_NAME
      - DISCOURSE_SMTP_PASSWORD
      - DISCOURSE_DB_PASSWORD=Password123
      - DISCOURSE_DB_HOST=postgres
    depends_on:
      - redis
      - postgres
    networks:
      - back

  sidekiq:
    image: indiehosters/discourse
    command: bundle exec sidekiq -q critical -q default -q low -v
    volumes:
      - /data/assets:/home/discourse/discourse/public/assets
    environment:
      - DISCOURSE_HOSTNAME=ubuntuvm.ecwl.cc
      - POSTGRES_USER=discourse
      - DISCOURSE_SMTP_PORT=587
      - DISCOURSE_SMTP_ADDRESS
      - DISCOURSE_SMTP_USER_NAME
      - DISCOURSE_SMTP_PASSWORD
      - DISCOURSE_DB_PASSWORD=Password123
    depends_on:
      - redis
      - postgres
    networks:
      - back

  postgres:
    image: postgres
    volumes:
      - /data/postgres:/var/lib/postgresql/data
    networks:
      - back
    environment:
      - POSTGRES_USER=discourse
      - POSTGRES_PASSWORD=Password123

  redis:
    image: redis
    command: ["--appendonly","yes"]
    networks:
      - back
    volumes:
      - /data/redis:/data

It’s a lot. For those coming into the Docker world and feeling overwhelmed looking at that, don’t fret. This is doable, and you can understand it.

Set up the environment

To have the above compose file work, there is a basic environment you have to set up for it. First, create a place for the data. In my above example, I just created /data. You can put it anywhere and change as you wish. This thing needs lots of folders, so make them all in one command:

# mkdir -p /data/{assets,uploads,backups,redis,postgres}

Then set user and group ownership and permissions on all those folders. Postgres seems to favour the user and group 999, while the rest will run in the context of user 1000. For our test, just set very permissive options so this doesn’t become an issue locally. Do this using the standard chown and chmod commands.
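The ownership split described above could be sketched like this. It is only an illustration under assumptions: the 999 and 1000 IDs are the ones I observed, DATA_ROOT and both function names are hypothetical, and the first function only prints a plan so nothing changes until you decide to apply it.

```shell
# Assumption: DATA_ROOT matches the host paths used in docker-compose.yml.
DATA_ROOT="${DATA_ROOT:-/data}"

# Print one "uid:gid path" pair per folder: 999:999 for postgres,
# 1000:1000 for the rest.
ownership_plan() {
  for dir in assets uploads backups redis postgres; do
    if [ "$dir" = "postgres" ]; then
      echo "999:999 $1/$dir"
    else
      echo "1000:1000 $1/$dir"
    fi
  done
}

# Apply the plan with chown and chmod; must be run as root.
apply_ownership() {
  ownership_plan "$1" | while read -r owner path; do
    chown -R "$owner" "$path"
    chmod -R u+rwX,g+rwX "$path"
  done
}

# Print the plan for review; nothing is changed yet.
ownership_plan "$DATA_ROOT"
```

Once the plan looks right, running apply_ownership /data as root applies it. On a throwaway test box, a blunter chmod -R a+rwX /data would also do.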

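With that, networks in Docker will need to be created. At this point, they aren’t going to do anything but keep the process moving. Strictly speaking, only lb_web is declared external in the compose file; docker-compose creates its own project-scoped back network, so the second command is harmless but optional. I continue to use the labels from my above example: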

# docker network create lb_web
# docker network create back
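Creating a network whose name already exists makes docker network create fail with an error, so a setup you expect to re-run might guard the call. A minimal sketch; ensure_network is a hypothetical helper name, while docker network inspect and docker network create are the standard Docker CLI subcommands.

```shell
# Create a Docker network only if it does not exist yet.
ensure_network() {
  if docker network inspect "$1" >/dev/null 2>&1; then
    echo "network $1 already exists"
  else
    docker network create "$1"
  fi
}
```

Calling ensure_network lb_web then does the right thing on both the first and any later run.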

Now, you can create the docker-compose.yml file. I’d generally keep it in a place that stays with your stuff, like in /data, but you can put it anywhere. Create the file and paste my example from above into it.

/data# nano docker-compose.yml
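One detail worth knowing before editing: the environment entries in the compose file that have no value (VIRTUAL_HOST, DISCOURSE_SMTP_ADDRESS, DISCOURSE_SMTP_USER_NAME and DISCOURSE_SMTP_PASSWORD) are passed through from the shell that runs docker-compose. If they are not set there, the containers get them empty. A sketch of setting and checking them; every value below is a made-up placeholder, and missing_vars is a hypothetical helper.

```shell
# Hypothetical values; replace with your own host name and mail server.
export VIRTUAL_HOST="forum.example.test"
export DISCOURSE_SMTP_ADDRESS="smtp.example.test"
export DISCOURSE_SMTP_USER_NAME="forum@example.test"
export DISCOURSE_SMTP_PASSWORD="change-me"

# Print the name of every listed variable that is unset or empty.
missing_vars() {
  for name in "$@"; do
    if [ -z "$(printenv "$name")" ]; then
      echo "$name"
    fi
  done
}

# Prints nothing when all four are set.
missing_vars VIRTUAL_HOST DISCOURSE_SMTP_ADDRESS \
  DISCOURSE_SMTP_USER_NAME DISCOURSE_SMTP_PASSWORD
```

With the variables exported, docker-compose config should print the file with the values filled in, which is a quick way to confirm they were picked up.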

You’ll want to edit things like passwords, users and perhaps the email servers. Most of that is self-explanatory in the docker-compose.yml file (or do nothing and let it be). Now, it’s time to tell docker-compose to set up the containers. This first command is going to fail, but it’s a start. Run this:

/data# docker-compose up

You’ll see lots of logging and a number of errors. That’s OK; just get out of this with CTRL+C and Docker will shut down the containers. Next is to get the database right by running a command to rebuild it. The output of this command will move fast and take a few minutes (the “app” here assumes the service name from my above example):

/data# docker-compose run --rm app bash -c "rake db:drop db:create db:migrate"

The next step is something called “precompiling the assets.” This is an internal thing and seems to be related to the Ruby on Rails asset pipeline. I don’t claim to know what the hell is happening that requires this, but it will generate and compress a bunch of JavaScript files essential to Discourse:

/data# docker-compose run --rm app bash -c "bundle exec rake assets:precompile"

Now you’re ready to load the entire stack, using -d to run it in the background:

/data# docker-compose up -d
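The last three commands (database rebuild, asset precompile, start in the background) can be chained so that a failure stops the sequence. This is a sketch, not something the images ship with: bootstrap_discourse and the COMPOSE variable are names I made up, and the commands inside are the same ones shown above.

```shell
# Assumption: COMPOSE is the compose command; override it for a dry run.
COMPOSE="${COMPOSE:-docker-compose}"

# Rebuild the database, precompile the assets, then start the stack.
# Each step runs only if the previous one succeeded.
bootstrap_discourse() {
  $COMPOSE run --rm app bash -c "rake db:drop db:create db:migrate" &&
    $COMPOSE run --rm app bash -c "bundle exec rake assets:precompile" &&
    $COMPOSE up -d
}
```

Run from /data, bootstrap_discourse executes the real commands, while COMPOSE=echo bootstrap_discourse only prints what would be run.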

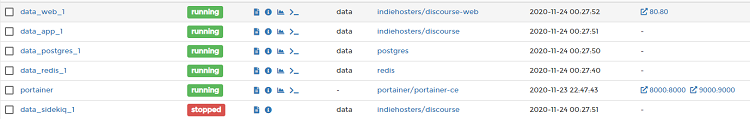

If all goes well, you’ll have all the required containers loaded and the “data_web_1” container will be serving on port 80. For the sake of speed, I’d suggest installing Portainer locally (if you don’t have it). This will give you quick access to the most common functions needed. The full running stack will look like this:

Loading the page located at http://ip.of.your.server will yield the start page of Discourse.

Having it installed, you can now mess with it, understand what components are useful and, perhaps the most important thing, understand how it might fit into your current infrastructure. No environment is the same, so this will help you understand if the tool is a massive hog on resources or is even worth running. The complexities of setting up Discourse seem to defeat the purpose of Docker. It should be portable and clear enough to deploy in multiple environments.

A note: This process is based on version 19.03.13 of Docker, version 1.27.4 of docker-compose, and 2.3.20 of the indiehosters/discourse image. I know things will change, and that may well invalidate some or all of what I’ve described here. Updates will happen, but even so, my hope is that parts of this article will still be useful in the future.